What’s your AI strategy? If you are a revenue leader right now, it is hard to avoid this question. But getting to a clear and actionable answer is not so straightforward.

That’s why we’ve created a series of three playbooks covering the process of identifying, organizing, and integrating AI solutions into your revenue workflow.

To recap:

Playbook 1: How to create an AI “habit loop” in your revenue process

Playbook 2: How to plug revenue leaks with cutting-edge tech

Today, in the third and final playbook, we’re covering one last key ingredient in making your AI strategy a success.

The Human Element of AI Adoption

As magical as some of these new AI technologies may seem, at this point they are anything but “plug and play” when it comes to transforming real business processes.

Ideally, you’d identify your biggest revenue leak, point AI at it, and watch the problem disappear. But there’s another factor to consider before pressing the “go” button.

Trust.

More than any other technology applied to the B2B revenue process in recent years, AI requires trust to be deployed successfully.

Deploying AI is more like hiring a new member (or many members) of your revenue organization than installing a new tool. When you bring on a new employee, you design their onboarding plan so they take on more complex and consequential tasks as they get up to speed.

Successful deployment of your AI strategy should look similar. Let’s take a closer look at how to build trust progressively as you roll out AI across your revenue process.

Clearing the “Trust Bar”

There’s a simple test you can use to evaluate where a particular use case fits in your AI roadmap. I call it the Trust Bar.

Simply put, different use cases require different levels of trust in AI. The more trust required, the higher the trust bar. You have to pick AI use cases that match where a given user, team, or organization stands on its journey of building trust in AI.

Picking applications with a trust bar that you can’t yet clear is a recipe for fear and pushback that ultimately slows your progress toward successful AI adoption. On the other hand, failing to progress beyond the low trust use cases will lead to underwhelming results.

One of the reasons that AI-powered meeting summarization got so much traction as an early use case for GenAI is that it has an inherently low trust bar. Meeting summaries don’t have to be 100% accurate to be useful, and the stakes are low if AI gets a few details wrong. And nobody wants to spend hours per week on meeting follow-ups.

At the same time, many organizations have stalled at low-trust use cases like meeting summarization and never progressed further in their AI adoption.

The takeaway? Start with AI use cases that require a low level of trust (it’s never too soon to start building those AI habit loops we discussed in Playbook 1). Then, raise the bar and take on more complex use cases as organizational trust increases.

How to Determine Trust Levels

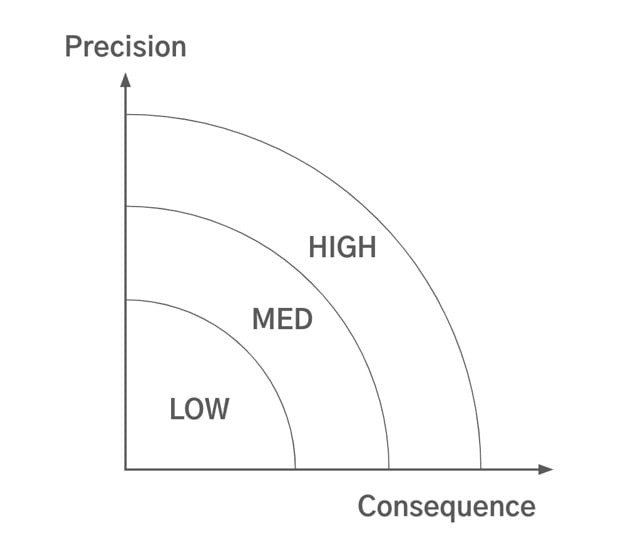

To figure out where a particular use case falls on the trust spectrum, consider two criteria:

- Precision. How precise does AI need to be in order to provide value?

- Consequence. If AI gets something wrong, what is the potential cost? That could be relationship cost, financial cost, or reputational cost, among others.

By plotting use cases against these two criteria, you can sort them into one of three categories:

- Low Trust.

These use cases do not require a high level of precision and are typically purely internal-facing. They’re great for testing and building familiarity. Example: meeting summarization.

- Moderate Trust.

These use cases require more precision and may ultimately have external impact, but with a human in the loop. They provide an opportunity to start testing how far automation can be trusted. Example: automated CRM updates.

- High Trust.

These use cases hand impactful, external-facing tasks entirely to AI-powered automation. This is where much of the potential efficiency gain from AI lies, but unfortunately, we can’t skip to the end. Example: fully automated sales prospecting.

The key is to know where you are in your journey of AI adoption, and pick your target use cases accordingly. Remember that different teams (and even individuals) may be at different stages.

While it’s easy to imagine what can go wrong by pursuing high-trust use cases too early, I’ll turn to a line commonly attributed to Michelangelo to remind us that the inverse may be even riskier:

“The greater danger for most of us lies not in setting our aim too high and falling short; but in setting our aim too low and achieving our mark.”

Start Small, Think Big

In summary, here’s a simple way to think about building a trust progression into your AI roadmap:

Focus on low-hanging fruit to build trust. Keep your eyes on the long-term vision for AI.

This “start small, think big” approach will enable you to build sufficient organizational trust without getting stuck in the land of “toy” applications.

As trust grows, so does your opportunity to take full advantage of AI’s game-changing potential.